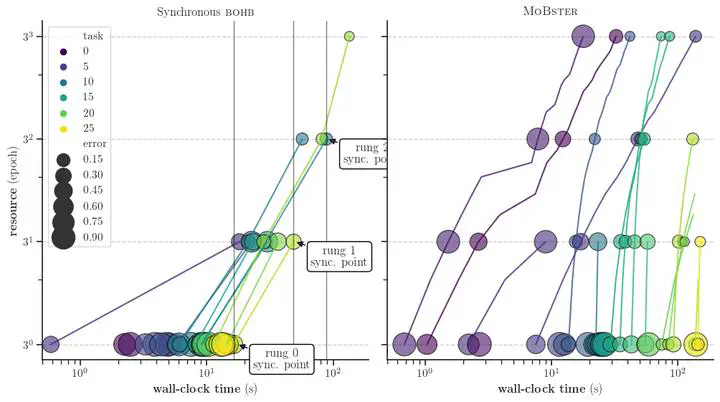

Behavior of synchronous BOHB compared to MoBSter, an asynchronous extension of BOHB based on Gaussian processes.

Behavior of synchronous BOHB compared to MoBSter, an asynchronous extension of BOHB based on Gaussian processes.Abstract

We introduce a model-based asynchronous multi-fidelity method for hyperparameter and neural architecture search that combines the strengths of asynchronous Hyperband and Gaussian process-based Bayesian optimization. At the heart of our method is a probabilistic model that can simultaneously reason across hyperparameters and resource levels, and supports decision-making in the presence of pending evaluations. We demonstrate the effectiveness of our method on a wide range of challenging benchmarks, for tabular data, image classification and language modelling, and report substantial speed-ups over current state-of-the-art methods. Our new methods, along with asynchronous baselines, are implemented in a distributed framework which will be open sourced along with this publication.